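MiniBatchSparsePCA is a variant of Sparse Principal Component Analysis (SparsePCA) that optimizes the sparse components over small mini-batches. This makes it faster than SparsePCA, at some cost in accuracy, and better suited to large datasets. The mini-batching happens inside `fit()`; the estimator does not offer `partial_fit()` for streaming data. It is primarily used for dimensionality reduction.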

The key hyperparameters are `n_components` (the number of sparse components to extract), `alpha` (the sparsity-controlling parameter; higher values give sparser components), and `batch_size` (the number of features taken in each mini-batch).
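To see how `alpha` controls sparsity, the sketch below fits the model with a few `alpha` values on the same kind of synthetic data and reports the fraction of exactly-zero entries in `components_`. The specific `alpha` values are arbitrary choices for illustration, and the printed fractions will depend on your data and scikit-learn version.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.decomposition import MiniBatchSparsePCA

# Same kind of synthetic data as the main example
X, _ = make_classification(n_samples=100, n_features=10, random_state=1)

sparsity = {}
for alpha in (0.1, 1.0, 5.0):  # illustrative values only
    model = MiniBatchSparsePCA(n_components=3, alpha=alpha, batch_size=10, random_state=1)
    model.fit(X)
    # components_ has shape (n_components, n_features); count exact zeros
    zero_fraction = np.mean(model.components_ == 0)
    sparsity[alpha] = zero_fraction
    print(f"alpha={alpha}: {zero_fraction:.0%} of component loadings are zero")
```

Comparing the printed fractions is a quick way to pick an `alpha` that gives the level of sparsity you want before tuning anything else.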

MiniBatchSparsePCA is a good choice for dimensionality reduction when you want sparse, more interpretable components and the dataset is large enough that SparsePCA becomes slow.

from sklearn.datasets import make_classification

from sklearn.model_selection import train_test_split

from sklearn.decomposition import MiniBatchSparsePCA

import matplotlib.pyplot as plt

# generate a synthetic dataset

X, _ = make_classification(n_samples=100, n_features=10, random_state=1)

# split into train and test sets

X_train, X_test = train_test_split(X, test_size=0.2, random_state=1)

# create the model

model = MiniBatchSparsePCA(n_components=3, alpha=0.1, batch_size=10, random_state=1)

# fit the model on the training data

model.fit(X_train)

# transform the test data

X_test_transformed = model.transform(X_test)

# plot the transformed data

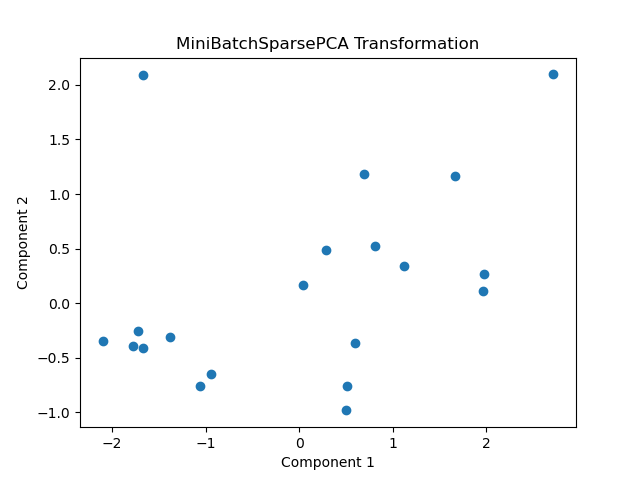

plt.scatter(X_test_transformed[:, 0], X_test_transformed[:, 1])

plt.xlabel('Component 1')

plt.ylabel('Component 2')

plt.title('MiniBatchSparsePCA Transformation')

plt.show()

Running the example gives an output like:

The steps are as follows:

1. Generate a synthetic dataset using `make_classification()` to create a dataset with 100 samples and 10 features. The dataset is then split into training and test sets using `train_test_split()`.
2. Instantiate a `MiniBatchSparsePCA` model with 3 components, an `alpha` of 0.1, and a batch size of 10. The model is fit on the training data using the `fit()` method.
3. Transform the test data into the new component space using the `transform()` method.
4. Plot the first two components of the transformed test data to visualize the result of the dimensionality reduction.
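Beyond the scatter plot, it can help to look at the learned components themselves. The sketch below re-runs the example's setup, prints `components_` (one row of feature loadings per sparse component), and maps the transformed test data back to feature space as `X_test_transformed @ components_ + mean_`. This linear back-projection is an approximate reconstruction used here for illustration; it assumes a scikit-learn version that exposes the `mean_` attribute.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.decomposition import MiniBatchSparsePCA

# Recreate the data and model from the example above
X, _ = make_classification(n_samples=100, n_features=10, random_state=1)
X_train, X_test = train_test_split(X, test_size=0.2, random_state=1)
model = MiniBatchSparsePCA(n_components=3, alpha=0.1, batch_size=10, random_state=1)
model.fit(X_train)
X_test_transformed = model.transform(X_test)

# Each row is one sparse component: its loading on each of the 10 features
np.set_printoptions(precision=2, suppress=True)
print(model.components_)

# Approximate back-projection into the original feature space
X_test_reconstructed = X_test_transformed @ model.components_ + model.mean_
mse = np.mean((X_test - X_test_reconstructed) ** 2)
print(f"Reconstruction MSE on the test set: {mse:.3f}")
```

Zero entries in a row mean that component ignores those features entirely, which is what makes sparse components easier to interpret than dense PCA loadings.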

This example demonstrates how to use the MiniBatchSparsePCA model for dimensionality reduction. The dataset here is deliberately small; the mini-batch optimization pays off mainly on larger datasets, where fitting SparsePCA becomes slow.
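To check whether the mini-batch variant actually helps on your own data, you can time both estimators side by side. The sketch below does this on a somewhat larger synthetic dataset; the dataset size, `n_components=5`, and `alpha=0.1` are arbitrary choices for illustration. Timings depend on hardware, data shape, and scikit-learn version, so treat this as a way to measure rather than a guaranteed result.

```python
import time
from sklearn.datasets import make_classification
from sklearn.decomposition import MiniBatchSparsePCA, SparsePCA

# A somewhat larger synthetic dataset (sizes chosen arbitrarily)
X, _ = make_classification(n_samples=2000, n_features=30, random_state=1)

estimators = [
    ("SparsePCA", SparsePCA(n_components=5, alpha=0.1, random_state=1)),
    ("MiniBatchSparsePCA",
     MiniBatchSparsePCA(n_components=5, alpha=0.1, batch_size=10, random_state=1)),
]

timings = {}
for name, estimator in estimators:
    start = time.perf_counter()
    X_reduced = estimator.fit_transform(X)  # fit, then project X onto the components
    timings[name] = time.perf_counter() - start
    print(f"{name}: {timings[name]:.2f} s, output shape {X_reduced.shape}")
```

Both estimators produce the same output shape, so the mini-batch variant can be swapped in wherever SparsePCA is used if it proves faster and accurate enough for your task.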