Performing Principal Component Analysis (PCA) on large datasets that do not fit into memory can be challenging. IncrementalPCA provides a solution by allowing PCA to be performed incrementally in mini-batches.

IncrementalPCA is a variant of PCA that processes data in chunks, so only one mini-batch needs to be in memory at a time. Key hyperparameters include n_components (the number of principal components to keep) and batch_size (the number of samples per mini-batch, used when calling fit(); with partial_fit() you supply the batches yourself). It is useful as a dimensionality reduction step ahead of classification, regression, or clustering on large datasets.
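As a minimal sketch of how the two hyperparameters fit together, the snippet below calls fit() with an explicit batch_size so that IncrementalPCA does the batching internally. The dataset size, the variable names (X_demo, ipca_demo), and batch_size=500 are illustrative assumptions, not recommendations.

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import IncrementalPCA

# small synthetic dataset; the sizes here are illustrative assumptions
X_demo, _ = make_classification(n_samples=2000, n_features=20, random_state=1)

# fit() slices X_demo into mini-batches of roughly batch_size rows internally
ipca_demo = IncrementalPCA(n_components=2, batch_size=500)
ipca_demo.fit(X_demo)

# one row per retained component, one column per input feature
print(ipca_demo.components_.shape)

# total number of samples processed across all mini-batches
print(ipca_demo.n_samples_seen_)
```

This route is convenient when the array is already addressable as a whole; partial_fit() is the better fit when batches arrive from a stream or are loaded one at a time.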

from sklearn.datasets import make_classification

from sklearn.model_selection import train_test_split

from sklearn.decomposition import IncrementalPCA

import matplotlib.pyplot as plt

import numpy as np

# generate a large synthetic classification dataset

X, y = make_classification(n_samples=10000, n_features=20, random_state=1)

# split into train and test sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=1)

# create IncrementalPCA model

n_batches = 10

ipca = IncrementalPCA(n_components=2)

# fit the model incrementally on mini-batches

for X_batch in np.array_split(X_train, n_batches):
    ipca.partial_fit(X_batch)

# transform the dataset

X_train_ipca = ipca.transform(X_train)

X_test_ipca = ipca.transform(X_test)

# plot the transformed dataset, colored by the training labels
# (y_train stays aligned with X_train after the shuffled split)

plt.scatter(X_train_ipca[:, 0], X_train_ipca[:, 1], c=y_train, cmap='viridis', edgecolor='k', s=20)

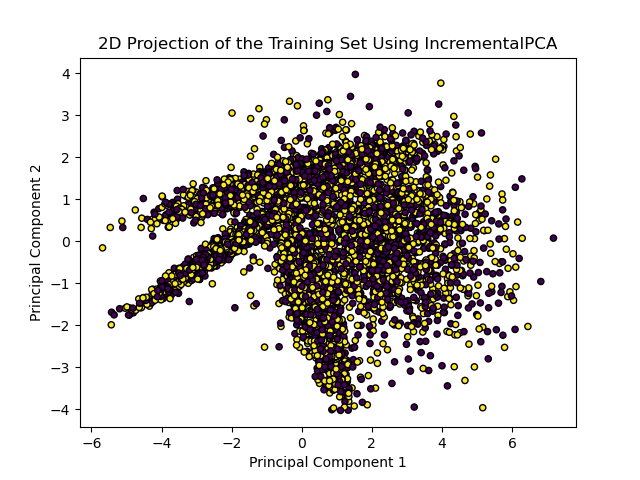

plt.title('2D Projection of the Training Set Using IncrementalPCA')

plt.xlabel('Principal Component 1')

plt.ylabel('Principal Component 2')

plt.show()

Running the example gives an output like:

The steps are as follows:

1. Generate a large synthetic classification dataset using make_classification(), which creates a dataset with the specified number of samples (n_samples) and features (n_features). The dataset is then split into training and test sets using train_test_split().

2. Create an IncrementalPCA model with n_components set to 2. The training data is split into mini-batches, and the model is fit incrementally on each batch using partial_fit().

3. Transform the training and test sets using the fitted IncrementalPCA model, which yields a 2D projection of the data.

4. Visualize the 2D projection of the training set using a scatter plot. Each point represents a sample, colored by its class label.
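As a hedged sanity check on the fit and transform steps, the sketch below fits IncrementalPCA batch by batch and a regular PCA on the same data, then prints the explained variance ratio of each so they can be compared by eye. The dataset size and the names X_chk, ipca_chk, and pca_chk are assumptions made for illustration. IncrementalPCA is an approximation, so the two sets of numbers need not match exactly.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA, IncrementalPCA

# synthetic data small enough to also fit a regular PCA for comparison
X_chk, _ = make_classification(n_samples=5000, n_features=20, random_state=1)

# incremental fit on 10 mini-batches
ipca_chk = IncrementalPCA(n_components=2)
for X_batch in np.array_split(X_chk, 10):
    ipca_chk.partial_fit(X_batch)

# full-batch PCA on the same data
pca_chk = PCA(n_components=2).fit(X_chk)

# fraction of total variance captured by each retained component
print("IncrementalPCA:", ipca_chk.explained_variance_ratio_)
print("PCA:           ", pca_chk.explained_variance_ratio_)

# transform() returns one row per sample and one column per component
X_chk_2d = ipca_chk.transform(X_chk)
print(X_chk_2d.shape)
```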

This example demonstrates how to use IncrementalPCA to perform PCA on large datasets that cannot fit into memory all at once. By processing data in mini-batches, IncrementalPCA enables efficient dimensionality reduction for large-scale machine learning tasks.
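To make the out-of-memory case more concrete, here is a minimal sketch that streams chunks from a disk-backed array using numpy.memmap instead of holding the full dataset in RAM. The file name big_data.dat, the random stand-in data, and the chunk size of 1000 rows are all hypothetical choices for illustration; a real pipeline would point at an existing file and pick a chunk size that suits the available memory.

```python
import os
import tempfile

import numpy as np
from sklearn.decomposition import IncrementalPCA

n_samples, n_features, chunk = 10000, 20, 1000

# write a stand-in "large" array to disk, one chunk at a time
# (big_data.dat is a hypothetical file name)
path = os.path.join(tempfile.mkdtemp(), "big_data.dat")
rng = np.random.default_rng(1)
X_disk = np.memmap(path, dtype="float64", mode="w+", shape=(n_samples, n_features))
for start in range(0, n_samples, chunk):
    X_disk[start:start + chunk] = rng.normal(size=(chunk, n_features))
X_disk.flush()
del X_disk

# reopen read-only; rows are read from disk only when a slice is accessed
X_mm = np.memmap(path, dtype="float64", mode="r", shape=(n_samples, n_features))

# fit incrementally, one chunk per partial_fit() call
ipca_mm = IncrementalPCA(n_components=2)
for start in range(0, n_samples, chunk):
    ipca_mm.partial_fit(X_mm[start:start + chunk])

# transform chunk by chunk as well; only the 2D result is accumulated
X_mm_2d = np.vstack([
    ipca_mm.transform(X_mm[start:start + chunk])
    for start in range(0, n_samples, chunk)
])
print(X_mm_2d.shape)
```

Each chunk must contain at least n_components rows, which is why the chunk size here is kept well above 2.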