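Dimensionality reduction simplifies a dataset by representing it with fewer variables while preserving as much of the essential information as possible. Scikit-learn's `FactorAnalysis` does this by assuming that the observed variables are generated from a smaller number of unobserved (latent) factors plus per-feature noise, so the factors it extracts describe the underlying relationships between the variables.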
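Written out, the generative model behind scikit-learn's `FactorAnalysis` looks roughly like this. The symbols are our own notation, not the article's: $\mathbf{x} \in \mathbb{R}^p$ is one sample, $\mathbf{h} \in \mathbb{R}^k$ the latent factors, $W \in \mathbb{R}^{p \times k}$ the loadings (the transpose of the fitted `components_` attribute) and $\Psi$ the diagonal noise covariance (the fitted `noise_variance_`).

$$
\mathbf{x} = W\mathbf{h} + \boldsymbol{\mu} + \boldsymbol{\epsilon}, \qquad
\mathbf{h} \sim \mathcal{N}(\mathbf{0}, I_k), \qquad
\boldsymbol{\epsilon} \sim \mathcal{N}(\mathbf{0}, \Psi), \quad \Psi = \operatorname{diag}(\psi_1, \dots, \psi_p)
$$

$$
\Rightarrow \quad \operatorname{Cov}(\mathbf{x}) = W W^\top + \Psi
$$

Because $\Psi$ is diagonal, every correlation between two features has to be explained by the shared factors in $W$. This is also what separates factor analysis from probabilistic PCA, which uses a single noise variance for all features.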

The key hyperparameters of `FactorAnalysis` include `n_components` (the number of factors to extract) and `tol` (the stopping tolerance on the increase in log-likelihood between fitting iterations).
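One way to see what these two parameters do is to fit models of different sizes on data whose true number of factors is known. The sketch below builds a hypothetical dataset from 3 latent factors (the sample count, feature count and noise level are arbitrary choices, not from the article), then reports the average log-likelihood from `score()` and the number of iterations `n_iter_` for each `n_components`. The `tol=1e-3` and `max_iter=1000` values are illustrative; a looser `tol` stops fitting after fewer iterations.

```python
import numpy as np
from sklearn.decomposition import FactorAnalysis

# Hypothetical data: 500 samples of 10 features driven by 3 latent factors plus noise
rng = np.random.default_rng(0)
H = rng.normal(size=(500, 3))                  # latent factor values
W = rng.normal(size=(3, 10))                   # loadings of each factor on each feature
X = H @ W + 0.5 * rng.normal(size=(500, 10))   # observed data

scores = {}
for n_components in range(1, 6):
    # tol: stop once the log-likelihood gain per iteration drops below this value
    fa = FactorAnalysis(n_components=n_components, tol=1e-3, max_iter=1000)
    fa.fit(X)
    # score() returns the average log-likelihood of the samples under the fitted model
    scores[n_components] = fa.score(X)
    print(f"n_components={n_components}: "
          f"avg log-likelihood={scores[n_components]:.3f}, "
          f"iterations={fa.n_iter_}")
```

On data like this the training log-likelihood should typically rise sharply up to the true number of factors and only slightly beyond it. On real data, comparing cross-validated scores is a safer way to pick `n_components`, since the training score never penalizes extra factors.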

The algorithm is appropriate for exploratory data analysis, as a preprocessing step before applying other machine learning algorithms, and for visualization.
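For the preprocessing use case, `FactorAnalysis` can be placed in a scikit-learn `Pipeline` so the factors are re-fitted inside each cross-validation fold. This is a minimal sketch; the dataset sizes, `n_components=5` and the choice of `LogisticRegression` as the downstream model are assumptions for illustration only.

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import FactorAnalysis
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline

# Hypothetical classification data: 5 informative features among 20
X, y = make_classification(n_samples=500, n_features=20, n_informative=5,
                           n_redundant=0, random_state=1)

# Reduce to 5 factors, then classify on the factor scores
pipe = make_pipeline(FactorAnalysis(n_components=5), LogisticRegression())

# Each fold fits FactorAnalysis on its own training part only, avoiding leakage
cv_scores = cross_val_score(pipe, X, y, cv=5)
print(f"mean accuracy: {cv_scores.mean():.3f}")
```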

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import FactorAnalysis
import matplotlib.pyplot as plt

# generate a synthetic dataset
X, _ = make_classification(n_samples=100, n_features=10, random_state=1)

# create the FactorAnalysis model
model = FactorAnalysis(n_components=2)

# fit the model on the dataset and project it onto the 2 factors
X_transformed = model.fit_transform(X)

# plot the transformed dataset
plt.scatter(X_transformed[:, 0], X_transformed[:, 1])
plt.xlabel('Factor 1')
plt.ylabel('Factor 2')
plt.title('Factor Analysis Transformation')
plt.show()
```

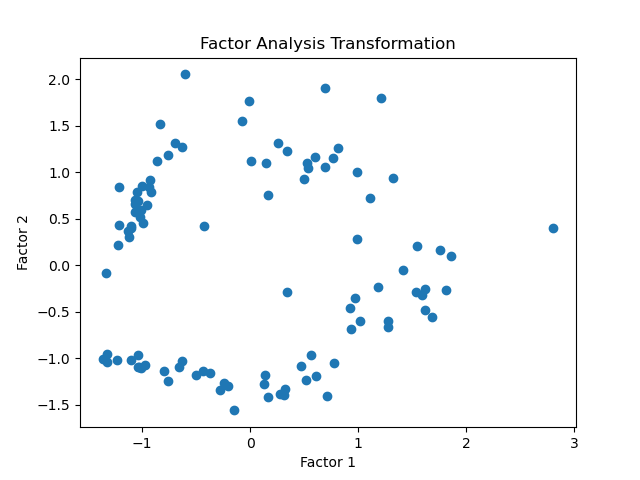

Running the example gives a plot like:

The steps are as follows:

1. First, a synthetic dataset is generated using the `make_classification()` function. This creates a dataset with a specified number of samples (`n_samples`) and features (`n_features`), with a fixed random seed (`random_state`) for reproducibility.
2. Next, a `FactorAnalysis` model is instantiated with `n_components=2`, specifying the reduction to two dimensions. The model is then fit on the dataset, and the data are transformed in the same call, using the `fit_transform()` method.
3. The transformed dataset is plotted using `matplotlib` to visualize the reduction from 10 features to 2 factors.
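After fitting, the model exposes the estimated loadings and noise variances as attributes. This sketch repeats the example's data and model, prints the shapes of `components_`, `noise_variance_` and the transformed data, and checks that `get_covariance()` equals the covariance implied by the factor model, $W W^\top + \Psi$ with $W$ taken as `components_.T` (our notation, not the article's).

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.decomposition import FactorAnalysis

# same data and model as the example above
X, _ = make_classification(n_samples=100, n_features=10, random_state=1)
model = FactorAnalysis(n_components=2)
X_transformed = model.fit_transform(X)

# loadings: one row per factor, one column per original feature
print(model.components_.shape)      # (2, 10)
# estimated noise variance of each original feature (diagonal of Psi)
print(model.noise_variance_.shape)  # (10,)
# factor scores: one row per sample
print(X_transformed.shape)          # (100, 2)

# covariance implied by the factor model: W W^T + Psi, with W = components_.T
implied_cov = model.components_.T @ model.components_ + np.diag(model.noise_variance_)
print(np.allclose(implied_cov, model.get_covariance()))  # True
```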

This example demonstrates how to quickly set up and use `FactorAnalysis` for dimensionality reduction in scikit-learn: a single `fit_transform()` call reduces the 10 input features to 2 factors.

The model can be fit directly on the dataset to extract underlying factors, enabling its use in exploratory data analysis and preprocessing for more complex machine learning workflows.
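For exploratory analysis, the extracted factors are often easier to interpret after a rotation, and a model fitted on one dataset can project new samples onto the same factors with `transform()`. The sketch below assumes scikit-learn 0.24 or later (where the `rotation` parameter was added) and uses hypothetical data in which the first three features depend on one factor and the last three on another.

```python
import numpy as np
from sklearn.decomposition import FactorAnalysis
from sklearn.model_selection import train_test_split

# Hypothetical data: features 0-2 driven by factor 1, features 3-5 by factor 2
rng = np.random.default_rng(42)
H = rng.normal(size=(300, 2))
W = np.array([[1.0, 1.0, 1.0, 0.0, 0.0, 0.0],
              [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]])
X = H @ W + 0.3 * rng.normal(size=(300, 6))

X_train, X_test = train_test_split(X, test_size=0.25, random_state=0)

# varimax rotation pushes each feature to load mainly on a single factor
fa = FactorAnalysis(n_components=2, rotation="varimax").fit(X_train)
print(np.round(fa.components_, 2))

# the fitted model projects unseen samples onto the same two factors
test_scores = fa.transform(X_test)
print(test_scores.shape)  # (75, 2)
```

Rotation does not change how well the model fits; it only re-expresses the same factor space so that the loadings in `components_` are easier to read.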